Introduction

Natural language processing (NLP) is the ability of a computer program to understand human language as it is spoken and written. It is a component of artificial intelligence (AI).

NLP has existed for more than 50 years and has its roots in linguistics. It has a variety of real-world applications in fields such as business intelligence, search engines, and medical research.

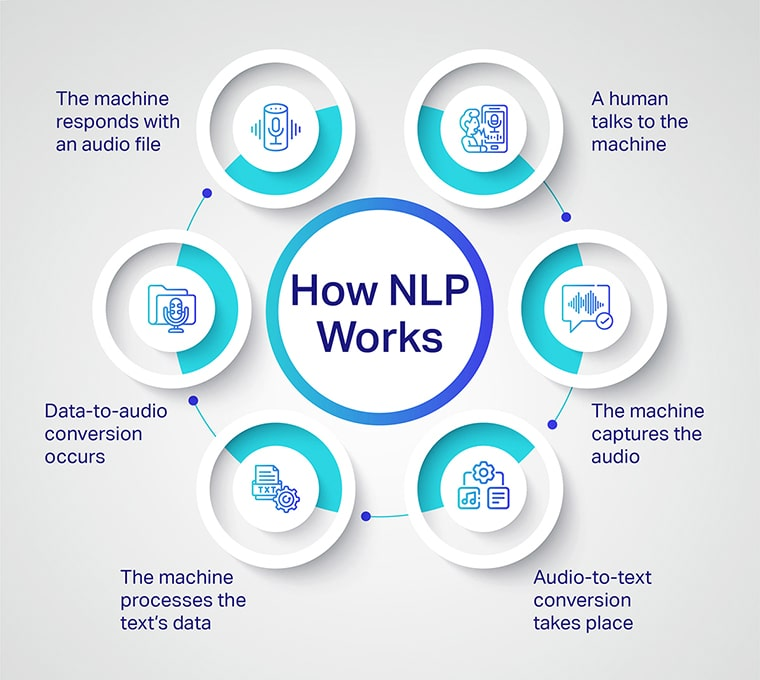

How NLP Works

NLP enables computers to understand natural language in a way that approaches how people do. It uses artificial intelligence to take real-world input, whether spoken or written, and process it into a form that a computer can interpret.

Natural language processing has two main stages: data preprocessing and algorithm development.

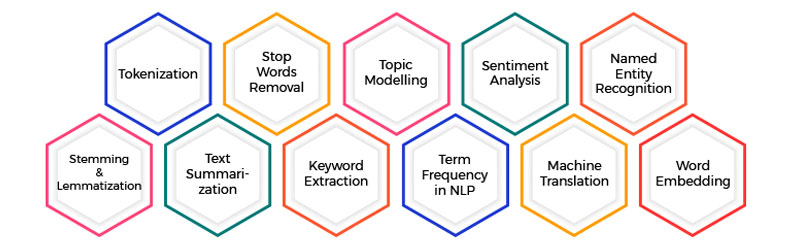

Data preprocessing means preparing and "cleaning" text data so that computers can analyse it. Preprocessing puts the data into a workable form and highlights the features in the text that an algorithm can use. This can be done in several ways, including:

• Tokenization:

Text is broken into smaller units, called tokens (typically words or sentences), that are easier to work with.

• Stop word removal:

Very common words (such as "the", "is", and "and") are removed from the text, leaving the words that carry the most information.

• Stemming and lemmatization:

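Words are reduced to their root forms for processing. Stemming trims word endings with simple rules (for example, "barked" becomes "bark"), while lemmatization uses vocabulary and grammar to return a word's dictionary form (for example, "better" becomes "good").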

• Part of speech tagging:

Each word is labelled with the part of speech it belongs to, such as noun, verb, or adjective.
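The four preprocessing steps above can be sketched in plain Python. This is a deliberately simplified, standard-library-only illustration, not how NLTK or any other library implements them: the stop-word list (`STOP_WORDS`), the suffix-stripping stemmer (`SUFFIXES`), and the lookup-table tagger (`TAG_LEXICON`) are small hypothetical stand-ins chosen for the example.

```python
import re

# Hypothetical, deliberately tiny stop-word list (real lists hold hundreds of words).
STOP_WORDS = {"the", "a", "an", "is", "are", "was", "and", "or", "of", "to", "in", "on", "at"}

# Hypothetical suffixes for a naive stemmer, checked in this order.
SUFFIXES = ("ing", "ed", "es", "s")

# Hypothetical lookup table standing in for a trained part-of-speech tagger.
TAG_LEXICON = {"dog": "NOUN", "cat": "NOUN", "bark": "VERB", "jump": "VERB", "loud": "ADJ"}


def tokenize(text: str) -> list:
    """Split text into lowercase word tokens, dropping punctuation."""
    return re.findall(r"[a-z']+", text.lower())


def remove_stop_words(tokens: list) -> list:
    """Keep only tokens that are not in the stop-word list."""
    return [t for t in tokens if t not in STOP_WORDS]


def stem(token: str) -> str:
    """Strip the first matching suffix, keeping a stem of at least three letters."""
    for suffix in SUFFIXES:
        if token.endswith(suffix) and len(token) - len(suffix) >= 3:
            return token[: -len(suffix)]
    return token


def pos_tag(tokens: list) -> list:
    """Label each token by table lookup; unknown words get the tag 'X'."""
    return [(t, TAG_LEXICON.get(t, "X")) for t in tokens]


def preprocess(text: str) -> list:
    """Run tokenization, stop-word removal, stemming, and tagging in order."""
    tokens = tokenize(text)
    content = remove_stop_words(tokens)
    stems = [stem(t) for t in content]
    return pos_tag(stems)


print(preprocess("The loud dogs barked at the cats."))
# [('loud', 'ADJ'), ('dog', 'NOUN'), ('bark', 'VERB'), ('cat', 'NOUN')]
```

A suffix stripper this naive will also produce non-words (it turns "chased" into "chas"), which is why practical stemmers such as Porter's use many more rules, and why lemmatization consults a dictionary instead.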

Once the data has been preprocessed, an algorithm is developed to process it. There are many different natural language processing algorithms, but two main types are commonly used:

Rule-based system:

This approach uses carefully designed linguistic rules. It was used early in the development of natural language processing and is still in use today.

Machine learning-based system:

Machine learning algorithms use statistical methods. They learn to perform tasks from the training data they are given, and they adjust their methods as more data is processed. Using a combination of machine learning, deep learning, and neural networks, these algorithms refine their own rules through repeated processing and learning.
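The difference between the two approaches can be illustrated on a small sentiment-classification task. The sketch below assumes hypothetical data throughout: the rule-based classifier relies on a hand-written word list (`POSITIVE`, `NEGATIVE`), while the machine learning classifier is a multinomial naive Bayes model that learns word statistics from a handful of labelled example sentences (`train_texts`). Naive Bayes is only one simple statistical method, chosen here for brevity; it is not a claim about which algorithm any particular NLP system uses.

```python
import math
from collections import Counter

# Rule-based: a hypothetical hand-written sentiment lexicon and a counting rule.
POSITIVE = {"good", "great", "love", "excellent"}
NEGATIVE = {"bad", "awful", "hate", "poor"}


def rule_based_sentiment(text: str) -> str:
    """Label text by counting lexicon hits; unknown words are ignored."""
    words = text.lower().split()
    score = sum(w in POSITIVE for w in words) - sum(w in NEGATIVE for w in words)
    if score > 0:
        return "pos"
    if score < 0:
        return "neg"
    return "neutral"


class NaiveBayes:
    """Multinomial naive Bayes with add-one (Laplace) smoothing."""

    def fit(self, texts, labels):
        self.label_counts = Counter(labels)
        self.word_counts = {label: Counter() for label in self.label_counts}
        for text, label in zip(texts, labels):
            self.word_counts[label].update(text.lower().split())
        self.vocab = {w for counts in self.word_counts.values() for w in counts}
        return self

    def predict(self, text):
        total = sum(self.label_counts.values())
        best_label, best_score = None, -math.inf
        for label, count in self.label_counts.items():
            n_words = sum(self.word_counts[label].values())
            # log P(label) + sum over known words of log P(word | label)
            score = math.log(count / total)
            for w in text.lower().split():
                if w in self.vocab:  # words never seen in training are skipped
                    score += math.log(
                        (self.word_counts[label][w] + 1) / (n_words + len(self.vocab))
                    )
            if score > best_score:
                best_label, best_score = label, score
        return best_label


# Hypothetical toy training data; a real model needs far more labelled text.
train_texts = [
    "great service and friendly staff",
    "friendly helpful staff",
    "slow service and rude staff",
    "rude and slow",
]
train_labels = ["pos", "pos", "neg", "neg"]
model = NaiveBayes().fit(train_texts, train_labels)

print(rule_based_sentiment("friendly staff"))  # neutral: "friendly" is not in the lexicon
print(model.predict("friendly staff"))  # pos: learned from the labelled examples
```

The rule-based classifier only knows the words someone wrote into its lexicon, whereas the learned model picks up that "friendly" tends to appear in positive examples, and it would keep updating those statistics if retrained on more data.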

Techniques and Methods of NLP

Natural language processing uses two main techniques: syntactic analysis and semantic analysis.

Syntax is the arrangement of words in a sentence so that they make grammatical sense. NLP uses syntax to assess the meaning of language according to grammatical rules. Syntax techniques include:

• Parsing:

Parsing is the grammatical analysis of a sentence. Example: A natural language processing system is given the sentence "The dog barked." Parsing involves breaking this sentence into its parts of speech, such as dog (noun) and barked (verb). This is useful for more complex downstream processing tasks.

• Word segmentation:

Word segmentation is the process of taking a string of text and deriving word forms from it. Example: A person scans a handwritten document into a computer. The algorithm can analyse the page and recognise that the words are separated by white space.
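Both syntax techniques can be sketched in a few lines of Python. The parser below is a toy recursive-descent parser for a hypothetical grammar (S → NP VP, NP → Det N, VP → V or V NP) with a hand-written lexicon (`LEXICON`); real parsers rely on much larger grammars or statistical models. The segmenter handles a slightly different case from the scanned-page example: it splits text that has no spaces at all (as in written Chinese or in hashtags) using dynamic programming over a hypothetical vocabulary, choosing the split with the fewest words.

```python
from typing import Optional

# Hypothetical toy lexicon mapping each known word to its part of speech.
LEXICON = {"the": "Det", "a": "Det", "dog": "N", "cat": "N", "barked": "V", "chased": "V"}


def parse(sentence: str) -> tuple:
    """Recursive-descent parse with the toy grammar
    S -> NP VP,  NP -> Det N,  VP -> V | V NP.
    Returns a nested (label, child, ...) tree; raises ValueError if parsing fails."""
    words = sentence.lower().rstrip(".").split()
    pos = 0  # index of the next unread word

    def expect(tag: str) -> tuple:
        nonlocal pos
        if pos < len(words) and LEXICON.get(words[pos]) == tag:
            pos += 1
            return (tag, words[pos - 1])
        raise ValueError(f"expected {tag} at word {pos}")

    def noun_phrase() -> tuple:
        return ("NP", expect("Det"), expect("N"))

    def verb_phrase() -> tuple:
        verb = expect("V")
        if pos < len(words):  # words remain, so try VP -> V NP
            return ("VP", verb, noun_phrase())
        return ("VP", verb)

    tree = ("S", noun_phrase(), verb_phrase())
    if pos != len(words):
        raise ValueError("unparsed words remain")
    return tree


def segment(text: str, vocab: set) -> Optional[list]:
    """Split unspaced text into vocabulary words, preferring the fewest words.
    best[i] holds the best segmentation of text[:i], or None if there is none."""
    best = [None] * (len(text) + 1)
    best[0] = []
    for end in range(1, len(text) + 1):
        for start in range(end):
            word = text[start:end]
            if best[start] is not None and word in vocab:
                candidate = best[start] + [word]
                if best[end] is None or len(candidate) < len(best[end]):
                    best[end] = candidate
    return best[len(text)]


print(parse("The dog barked."))
# ('S', ('NP', ('Det', 'the'), ('N', 'dog')), ('VP', ('V', 'barked')))
print(segment("thedogbarked", {"the", "dog", "bark", "barked", "ed"}))
# ['the', 'dog', 'barked']
```

Preferring the fewest words is one simple tie-breaking rule; it rejects "the dog bark ed" in favour of "the dog barked", but practical segmenters usually score candidate splits with word frequencies instead.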

Semantics is concerned with the use and meaning of words. Natural language processing applies algorithms to understand the meaning and structure of sentences. Semantics techniques include:

• Word sense disambiguation:

This derives the meaning of a word from its context. Example: Consider the sentence "The pig is in the pen." The word pen has several meanings. Word sense disambiguation lets an algorithm understand that "pen" here refers to a fenced-in area, not a writing instrument.

• Named entity recognition:

Named entity recognition identifies words that can be grouped into categories, such as people or companies. Example: An algorithm using this method could analyse a news article and find all mentions of a certain company or product. Using the semantics of the text, it could tell apart entities that look identical. In the sentence "Daniel McDonald's son went to McDonald's and ordered a Happy Meal," the algorithm could recognise the two instances of "McDonald's" as two separate entities: one a person and one a restaurant.
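The two semantic techniques can be sketched with deliberately small, hand-written resources. Word sense disambiguation below uses the simplified Lesk algorithm, which picks the sense whose dictionary-style gloss shares the most words with the surrounding sentence; the glosses (`SENSES`) and stop words (`STOP`) are hypothetical. The named entity recognizer is a rule-and-word-list sketch, not the statistical models real systems use: the `FIRST_NAMES` and `ORGANIZATIONS` lists and the rule "a known first name followed by a capitalised word is a person" are assumptions made for this example.

```python
# Hypothetical sense inventory: each sense of "pen" with a short gloss.
SENSES = {
    "pen": {
        "enclosure": "an enclosure where animals such as a pig or sheep are kept",
        "writing instrument": "an instrument for writing or drawing with ink",
    }
}
# Hypothetical stop words ignored when counting overlaps.
STOP = {"a", "an", "the", "is", "in", "or", "with", "her", "of", "to", "for", "on", "as", "are"}

# Hypothetical word lists for the named entity recognizer.
FIRST_NAMES = {"Daniel"}
ORGANIZATIONS = {"McDonald's"}


def simplified_lesk(word: str, sentence: str) -> str:
    """Return the sense of `word` whose gloss overlaps most with the sentence."""
    context = set(sentence.lower().rstrip(".").split()) - STOP - {word}
    best_sense, best_overlap = None, -1
    for sense, gloss in SENSES[word].items():
        overlap = len(context & (set(gloss.split()) - STOP))
        if overlap > best_overlap:  # ties keep the earlier-listed sense
            best_sense, best_overlap = sense, overlap
    return best_sense


def recognize_entities(sentence: str) -> list:
    """Tag PERSON and ORG mentions using the word lists and one naming rule."""
    tokens = [t.strip(".,!?") for t in sentence.split()]
    entities = []
    i = 0
    while i < len(tokens):
        token = tokens[i]
        nxt = tokens[i + 1] if i + 1 < len(tokens) else ""
        if token in FIRST_NAMES and nxt[:1].isupper():
            # Rule: known first name + capitalised word is a person; drop a possessive 's.
            surname = nxt[:-2] if nxt.endswith("'s") else nxt
            entities.append((f"{token} {surname}", "PERSON"))
            i += 2
            continue
        if token in ORGANIZATIONS:
            entities.append((token, "ORG"))
        i += 1
    return entities


print(simplified_lesk("pen", "The pig is in the pen."))  # enclosure
print(simplified_lesk("pen", "She wrote in ink with her pen."))  # writing instrument
print(recognize_entities("Daniel McDonald's son went to McDonald's and ordered a Happy Meal."))
# [('Daniel McDonald', 'PERSON'), ("McDonald's", 'ORG')]
```

Both sketches break down quickly outside their tiny word lists (the Lesk overlap needs exact word matches, so "pigs" would not match "pig"), which is why production systems learn these decisions from large annotated corpora.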

Three commonly used tools for natural language processing are the Natural Language Toolkit (NLTK), Gensim, and Intel NLP Architect. NLTK is an open-source Python library that includes data sets and tutorials. Gensim is a Python library for topic modelling and document indexing. Intel NLP Architect is a Python library for deep learning topologies and techniques in NLP.

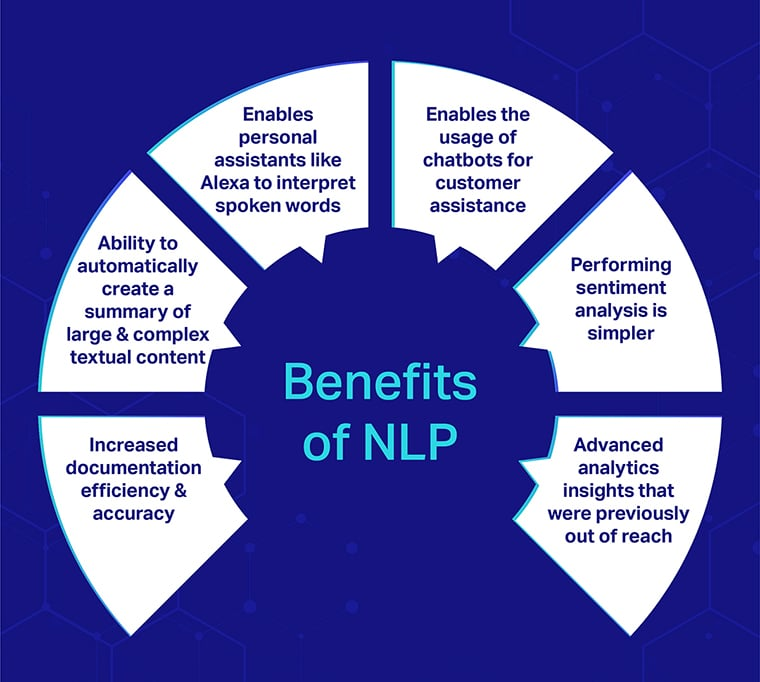

Benefits of NLP

The main benefit of NLP is that it improves the way people and computers communicate. The most direct way to control a computer is through code, the computer's own language. When computers can understand human language, interacting with them becomes far more intuitive for people.

Other benefits include:

• Improved efficiency and quality of documentation.

• The ability to automatically produce a readable summary of a longer, more complex original text.

• Support for voice-activated personal assistants such as Alexa.

• The ability for a company to use chatbots for customer service.

• Easier sentiment analysis.

• Advanced analytics insights that were previously out of reach because of the sheer volume of data.

Conclusion

Natural language processing plays a vital role in technology and in the way people use it. It is used in many real-world applications in both the business and consumer spheres, including chatbots, cybersecurity, search engines, and big data analytics. NLP is expected to continue playing a significant role in business and everyday life.